For all attending the AR-Sci – AR for Science Education webinar, references and media can be found below.

About the Delphi method:

Hsu, C., & Sandford, B.A. (2007). The Delphi technique: Making sense of consensus. Practical Assessment, Research & Evaluation, 12(10), 1-7

McDermott, J.H. et al. (1995). A Delphi survey to identify the components of a community pharmacy clerkship. American Journal of Pharmaceutical Education, 59(4), 334-341

Osborne, J., Ratcliffe, M., Collins, S., Millar, R. & Duschl, R. (2001). What should we teach about science? A Delphi study. Report from the EPSE Research Network.

https://www.york.ac.uk/media/educationalstudies/documents/research/epse/DelphiReport.pdf

Osborne, J., Collins, S., Ratcliffe, M., Millar, R. & Duschl, R. (2003). What “ideas-about-science” should be taught in school science? A Delphi study of the expert community. Journal of Research in Science Teaching, 40(7), 692-720

Two reviews about learning technology/AR in science:

Krajcik, J.S. & Mun, K. (2014). Promises and challenges of using learning technology to promote student learning of science. In N. Lederman & S. Abell (Eds.), Handbook of Research on Science Education, Vol. II, pp. 337-360

Wu, H., Lee, S.W., Chang, H. & Liang, J. (2013). Current status, opportunities and challenges of augmented reality in education. Computers & Education, 62, 41-49

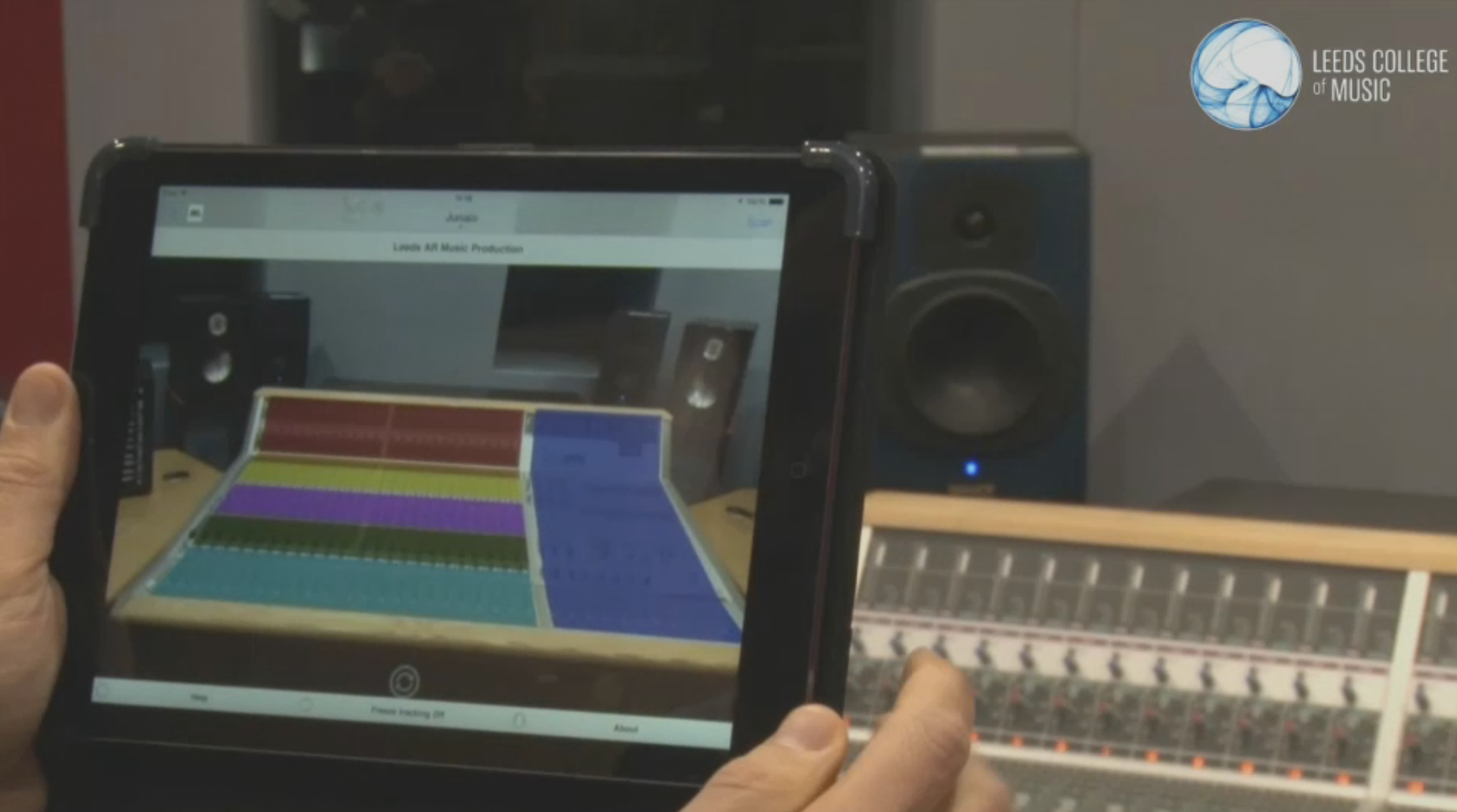

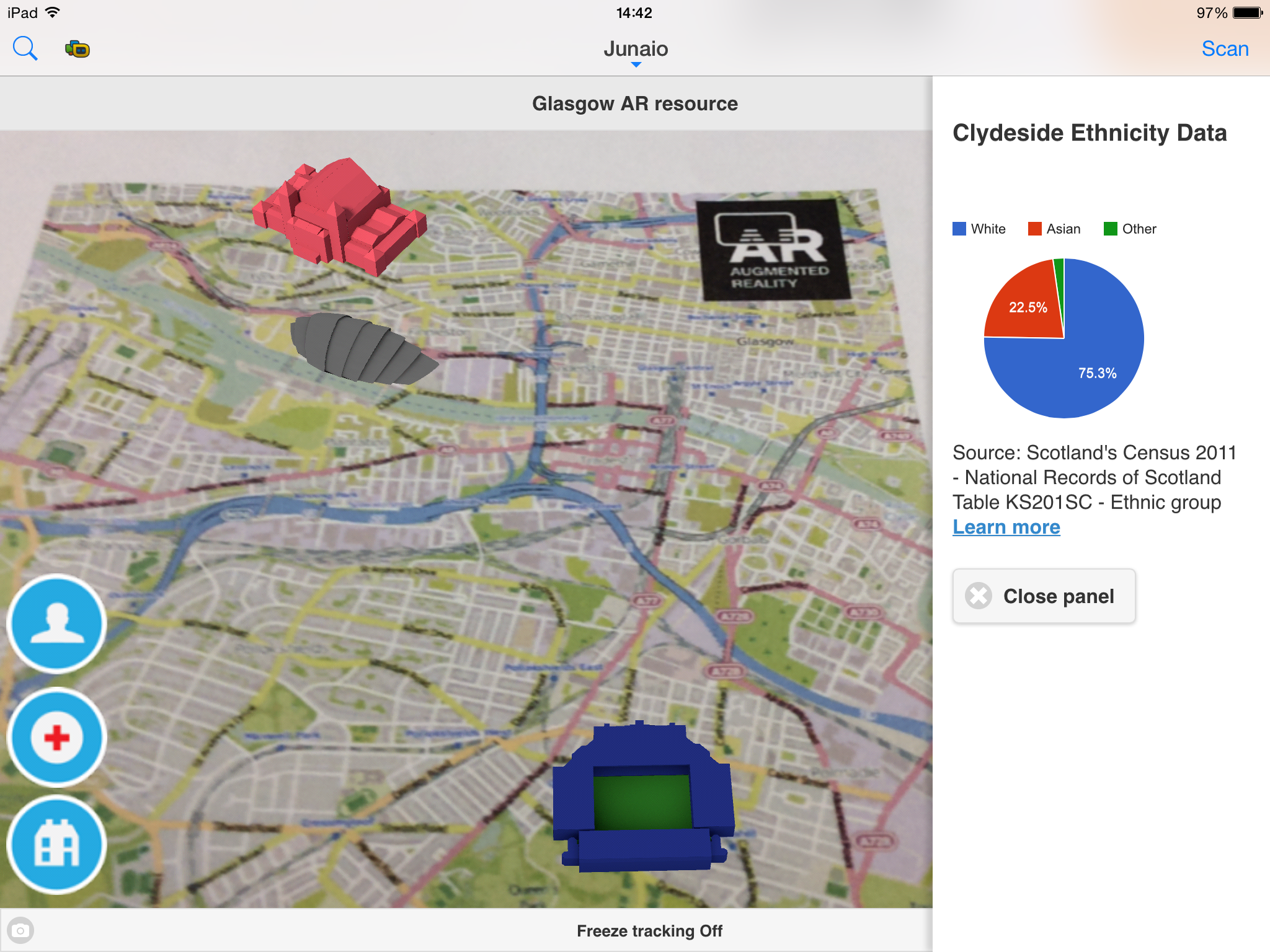

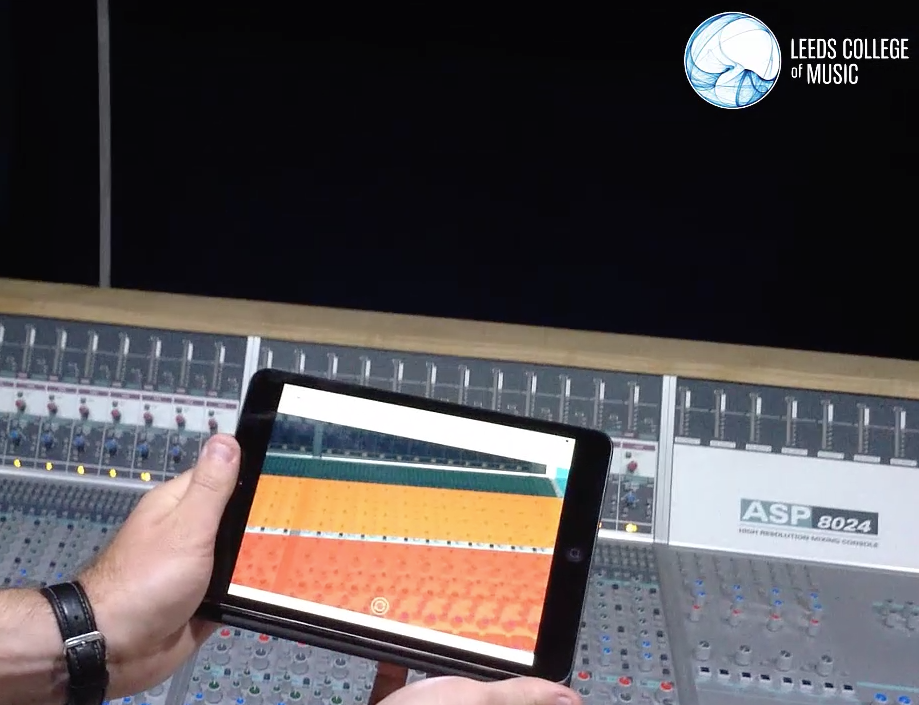

Matt Ramirez – Jisc

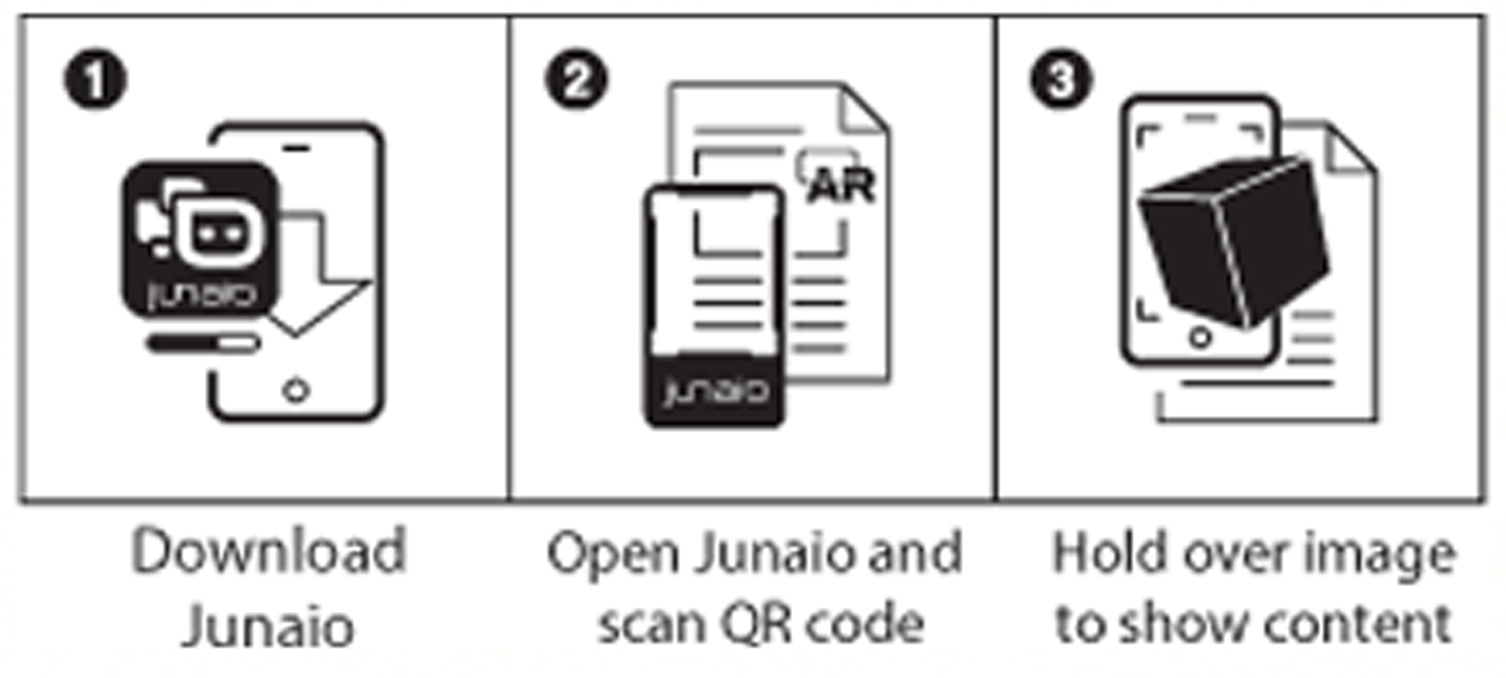

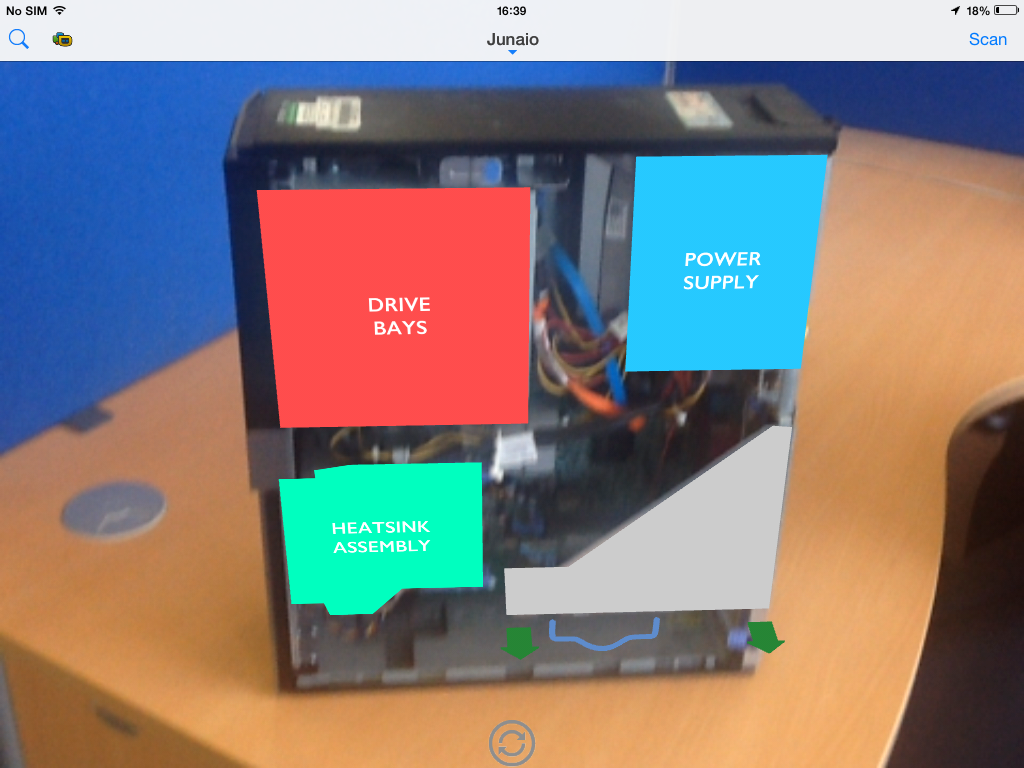

Augmented Reality technology

Media:

“Hud on the cat” by Rama. Licensed under Public Domain via Wikimedia Commons:

http://commons.wikimedia.org/wiki/File:Hud_on_the_cat.jpg#/media/File:Hud_on_the_cat.jpg

Metaio

http://www.metaio.com/customers/case-studies/augmented-reality-automotive-prototyping/index.html

Jisc

http://teamscarlet.wordpress.com

Benefits of Augmented Reality in Science Education

Media:

BBC Frozen Planet Augmented Reality

https://www.youtube.com/watch?v=fv71Pe9kTU0

Augmented Reality Sandbox

http://idav.ucdavis.edu/~okreylos/ResDev/SARndbox/

https://youtu.be/Ki8UXSJmrJE

Anatomy 4D

http://daqri.com/project/anatomy-4d/#.VU9WQpM03So

https://youtu.be/ITEsxjnmvow

Elements 4D

http://elements4d.daqri.com/

https://youtu.be/oOdMfgloUD0

Literature:

Cheng, K. H., & Tsai, C. C. (2013). Affordances of augmented reality in science learning: suggestions for future research. Journal of Science Education and Technology, 22(4), 449-462.

FitzGerald, E., Ferguson, R., Adams, A., Gaved, M., Mor, Y., & Thomas, R. (2013). Augmented reality and mobile learning: the state of the art. International Journal of Mobile and Blended Learning, 5(4), 43-58.

Radu, I. (2014). Augmented reality in education: a meta-review and cross-media analysis. Personal and Ubiquitous Computing, 18(6), 1533-1543.

Wu, H. K., Lee, S. W. Y., Chang, H. Y., & Liang, J. C. (2013). Current status, opportunities and challenges of augmented reality in education. Computers & Education, 62, 41-49.