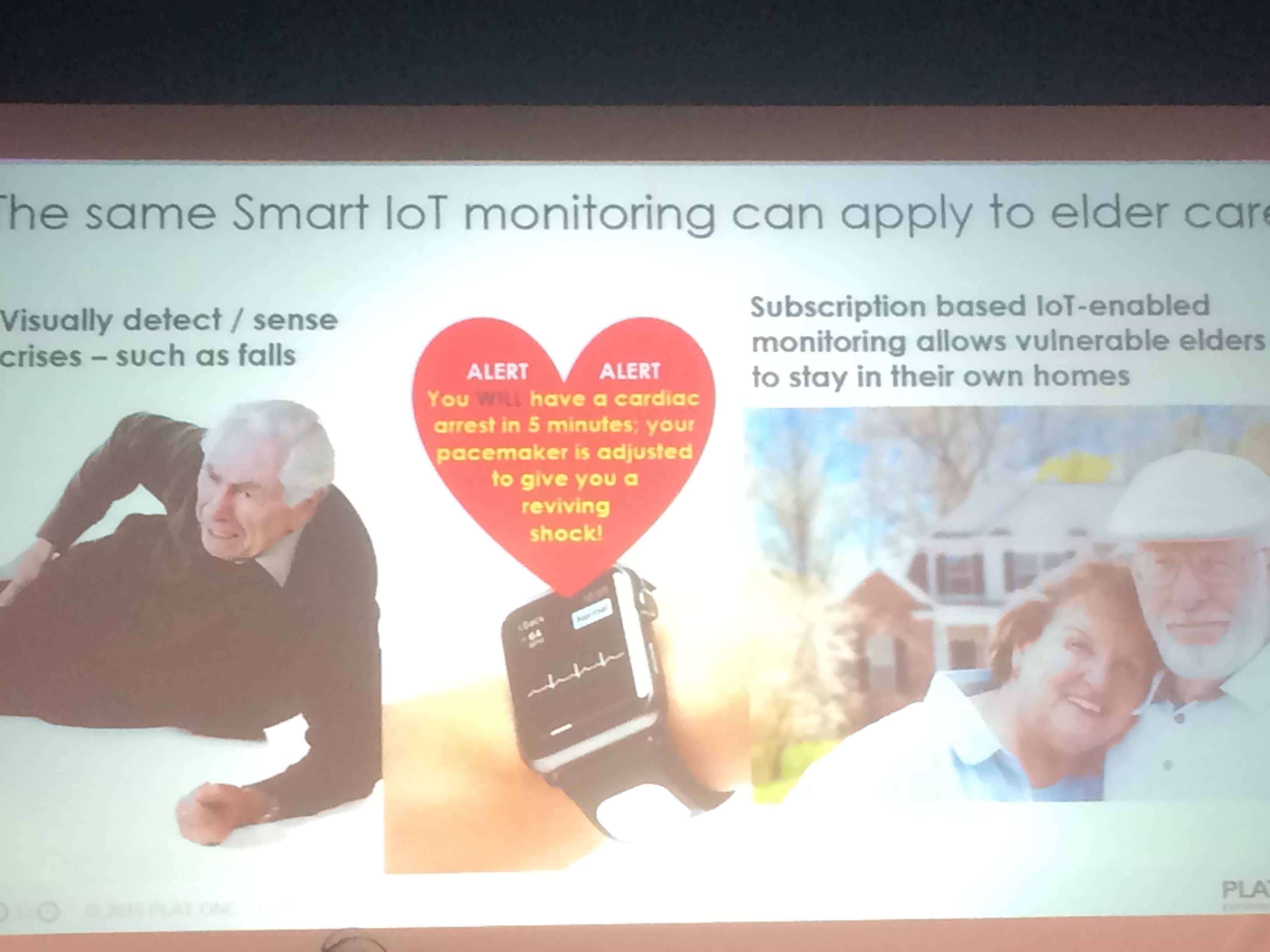

At the recent VR & AR World 2016, which took place at the ExCeL centre in London, I was really excited to explore the vibe and experiences around the cutting-edge technologies that 50 countries came to share from all around the world. With established companies like Meta, HTC, Epson and many more showcasing their work in Augmented, Virtual and Mixed Reality, it was exciting to see their latest developments. And while there was still some gimmick-led content, the majority were aiming to show what added value these technologies can offer. Informed by my experience of visiting VR & AR World 2016, I will concentrate on their potential in the next-generation learning environment.

- Sense of presence and the impact on the learner’s identity:

The latest Toyota C-HR VR experience was showcased at the exhibition. It lets you digitally discover and personalise this specific vehicle before it is released. This time, it was the fact that I had not yet tried getting into a car in VR that sparked my curiosity to try the Toyota experience. Throughout the experience, I was able to walk around the car, open doors, sit inside and change colours and some settings inside the car. However, what astonished me was a piece of feedback I got from one of the event’s visitors who was watching the demo. He said: “yes, it is definitely important to open the door to get off the car”. I stopped for a second, thinking about the comment he made.

Sure, I felt I was actually in the car: I opened the door when I had finished experimenting with the car and wanted to get out. If you look at the chair in the picture below, it is obvious that I could get off the chair without needing to open any door.

Toyota C-HR VR experience

It is funny, yet it is the same thing that makes VR really powerful. This is one of the main things that makes me so passionate about VR and convinced that it has great potential. The sense of presence that VR enables can make you feel, psychologically, that you are taking up physical space within the virtual environment whilst you are physically located in a different place. It is not only bringing us experiences we never thought we could have again, or enabling us to be in places that may be dangerous or costly to visit otherwise; it is actually enriching our own life experiences. If we think about how many opportunities VR can bring us, neurally speaking it is contributing to shaping our identity and who we really are: “who we are depends on where we’ve been”.

This is great because with VR the experiences we will be able to try can be limitless, and could become more unique as they are personalised to how we individually interact with them.

I believe that this is going to make a tremendous impact on learners’ experiences and their understanding of the world, as they will be able to reach things and explore places far beyond their physical locations. Couldn’t this be transformative in the next-generation learning environment, overcoming the limitations of travel to learn about specific places or particular periods of time, and furthermore enabling learners to get different perspectives?

Toyota uses the HTC Vive in this demo, which is a favoured VR technology. It was widely used across the event floor thanks to its high accuracy, speed and low latency compared to other VR headsets.

In an earlier blog post, Matthew Ramirez talked about the techniques and tricks that the Vive deploys to enhance immersion and the sense of presence in VR and to overcome some problems found with other headsets, such as nausea.

- Virtual Reality and Mixed Reality in Maintenance and Engineering:

If we take this example even further, Epson, Vuzix and Meta have been developing their own smart/mixed reality glasses for the automotive and construction industries. Colleges and vocational courses are the ones that could benefit from these technologies the most, particularly to smooth the transition between college, apprenticeships and real-life jobs. With VR, AR and MR, students could be better prepared for solving real-life problems, as they could develop many of the required skills by working with cutting-edge tech in very safe and constructive learning spaces.

This in itself could empower learners, and particularly girls, to get into engineering and technology careers, given that a lack of confidence has contributed to a shortage in the skills required for STEM-related jobs, as reported in the Guardian and BBC News. Stimulating experiences that engage students in problem solving and challenging activities with the use of VR and AR could help unlock learners’ creativity to come up with solutions in a flexible and safe environment. They are able to make mistakes and get feedback, and this could increase girls’ confidence in their abilities and affect their career choices.

This is a video on using Microsoft HoloLens Mixed Reality in architecture, which I believe is revolutionary, as it will play a vital role in rethinking design, construction and engineering for the next generation of much-needed engineers and designers.

- Virtual Reality and Storytelling:

Another point on the event floor that attracted me was more about the content and the relative simplicity of the technology. The London Stereoscopic Company (LSC), which has a long reputation for publishing 3D stereoscopic images from Victorian times to the modern day, is now adopting VR to present its digital 3D stereoscopic content and films in a more exciting way and to make them available to anyone with a smartphone. It is bringing historical periods, books, collections of original images and VR films to us using a small, low-cost VR kit, as you can see in the image.

I immediately thought that this is a great medium for storytelling, one that could make telling a story in a classroom more fun and flexible.

OWL VR KIT

You might argue that with this sort of simple kit you do not get the same high-end experience as with the more expensive VR headsets. However, I believe that for entry-level users or even kids, it could be a great way to bring information that is only available as text in books or on cards to life through VR and digital 3D content: “When this content is viewed in stereo the scene leaps into 3-dimensional life”, as Brian May describes it. I enjoyed having access to the fascinating world of stereoscopy they offer through the cards, as it made me feel like a child again.

This is the OWL VR KIT viewer for any 3D content and VR films. It comes as a box designed with high-quality focusable optics.

Kits of this sort, like Google Cardboard, are allowing more people to at least have a first experience with VR, and so are increasing the number of people who are savvy and educated about what VR could potentially offer them.

My take from this example is that the technology alone cannot guarantee a fun and interesting experience. What we need is more compelling stories, stories that trigger emotions and that sense of wonder we all had when we were children. These are the sort of things that make VR valuable as a storytelling medium.

- Haptic feedback in VR and what is next?

At the event, I tried a form of haptic feedback for the first time, using a hand-held controller that allows you to feel some sort of force while interacting with virtual objects on the screen. This Sensable Phantom haptics system is one of the machines that VR and AR companies are experimenting with to explore what kind of realistic feedback a doctor could have when puncturing a patient’s skin. The system acts as a handle to virtual objects, providing stable force feedback for a single point in space.

Hand-held controller

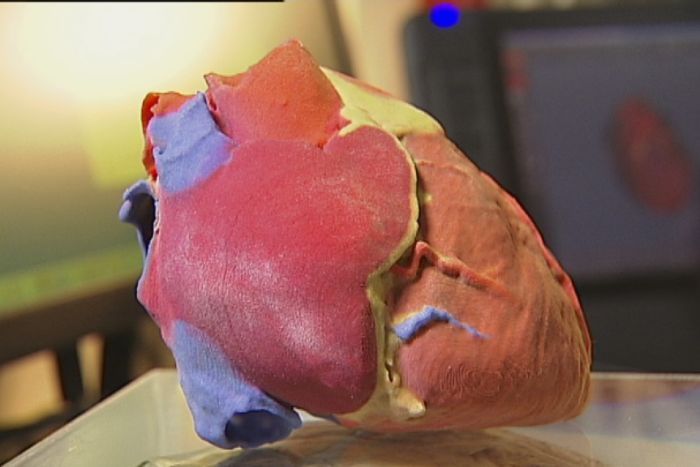

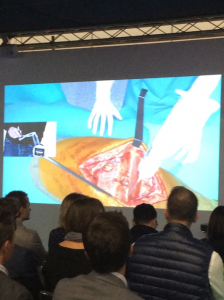

I imagine this is going to be essential to any VR and AR experience. We all use our hands intuitively to reach out for things, to interact with things and people, and to feel things. In this example of using the controller in remote surgery training, haptic feedback helped to create an illusion so that trainees felt more present in the remote surgery room. This makes the experience more believable for surgeons or trainee doctors, enabling them to understand and perform tasks far more effectively and safely. For me, as haptic and tactile technologies advance and become integrated into more of these experiences, particularly when led by cognitive neuroscience research on how we interact with the world, the quality of education with VR will become increasingly high. Medical students will then have great opportunities to experience and operate in more realistic surgery situations safely and constructively. Haptic feedback could also be a way of better informing students in the decision-making process.

Surgery in VR with Haptic

The opportunities for using haptics in education to perform tasks more efficiently and effectively are great. Chris Chin, the founder of HTC Vive, expects in his interview that education and medical experiences with VR are coming next in 2017. Will that be with some advances in haptic technology? Shouldn’t we prepare learners for these new delivery methods, or at least prepare the current learning environment to be equipped to deliver and support these sorts of experiences?

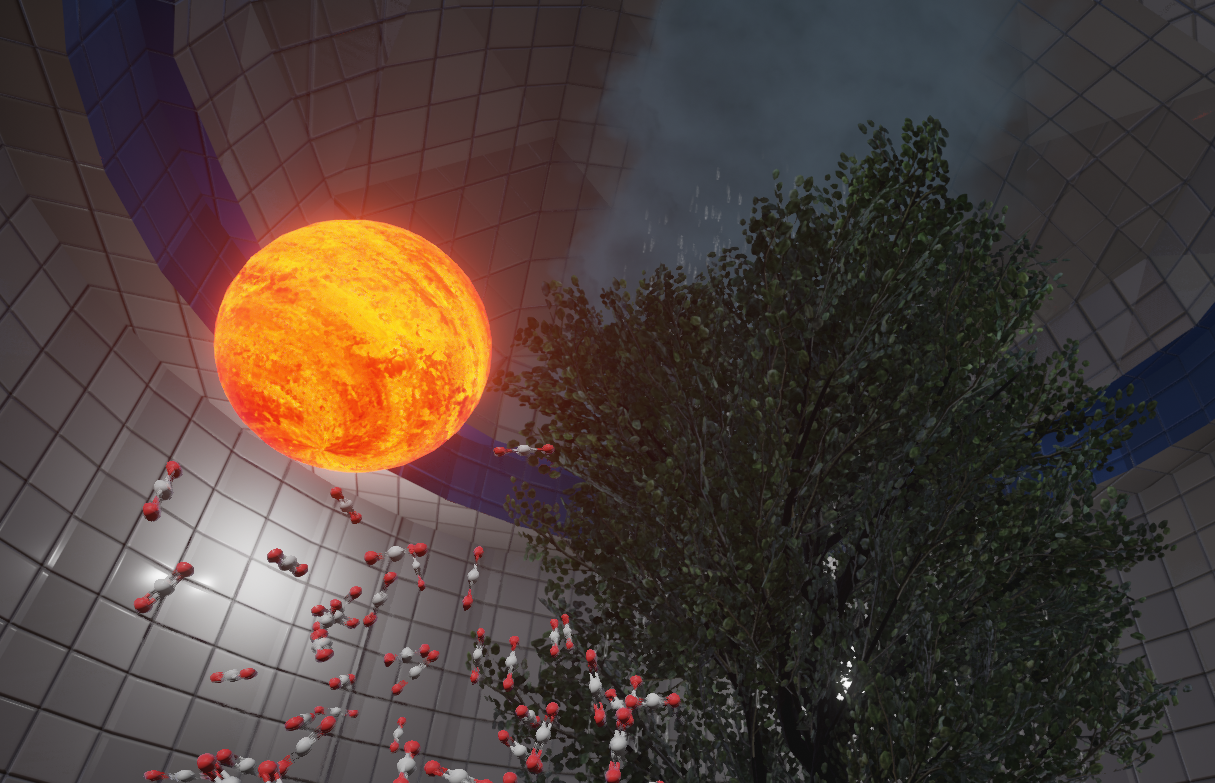

Here are a few examples of our work at Jisc in Augmented Reality and Virtual Reality to immerse, engage and educate learners. If you are interested in embarking on your own project or need help, we’d love to share experiences with you.