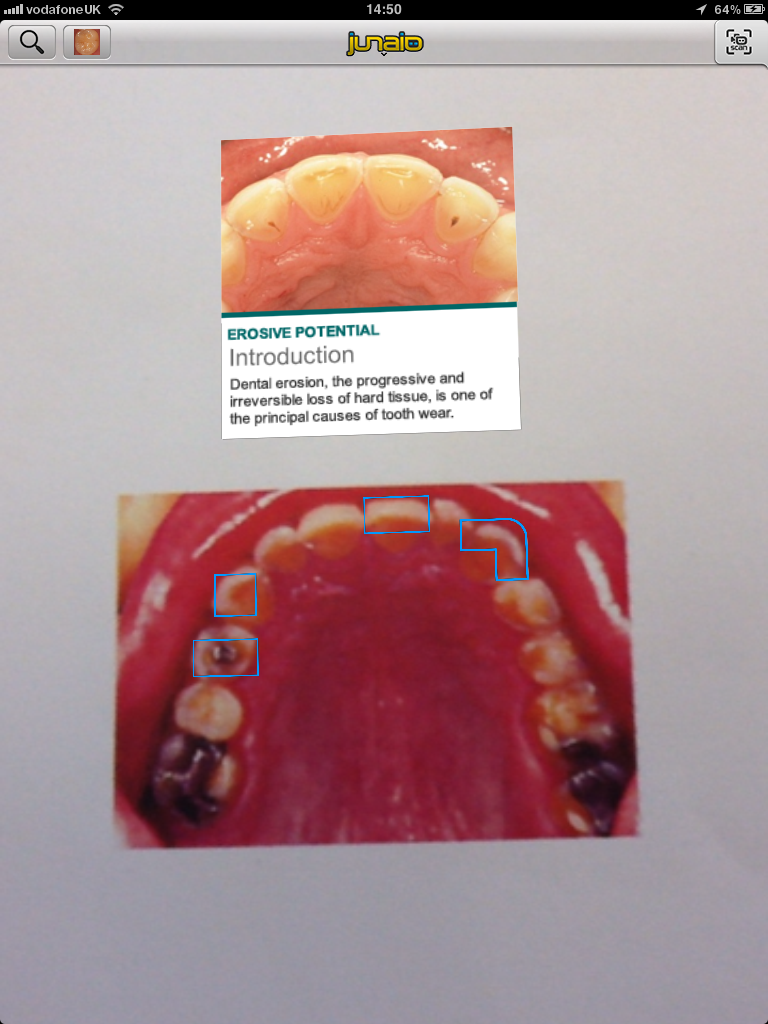

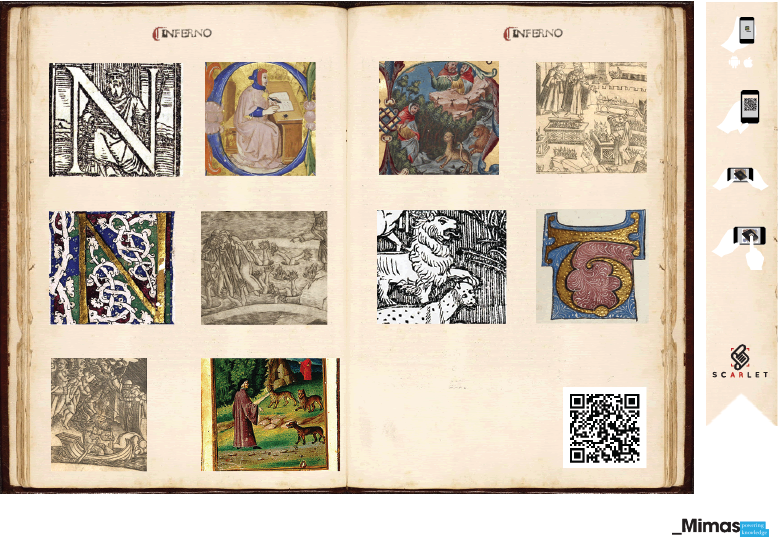

The image above has been used as the pattern for the Crafts Study Centre’s first proof-of-concept augmented reality (AR) app. The idea grew out of conversations between Jean Vacher, Curator, Crafts Study Centre (CSC), and Adrian Bland, Contextual Studies Co-ordinator, School of Media and Culture, University for the Creative Arts (UCA), and centred on the concept of the door to The Little Gallery being a portal to another world. My role as Educational Technologist has been to bring this to reality… Augmented Reality!

SCARLET+ Technical workshop, 1st October 2012

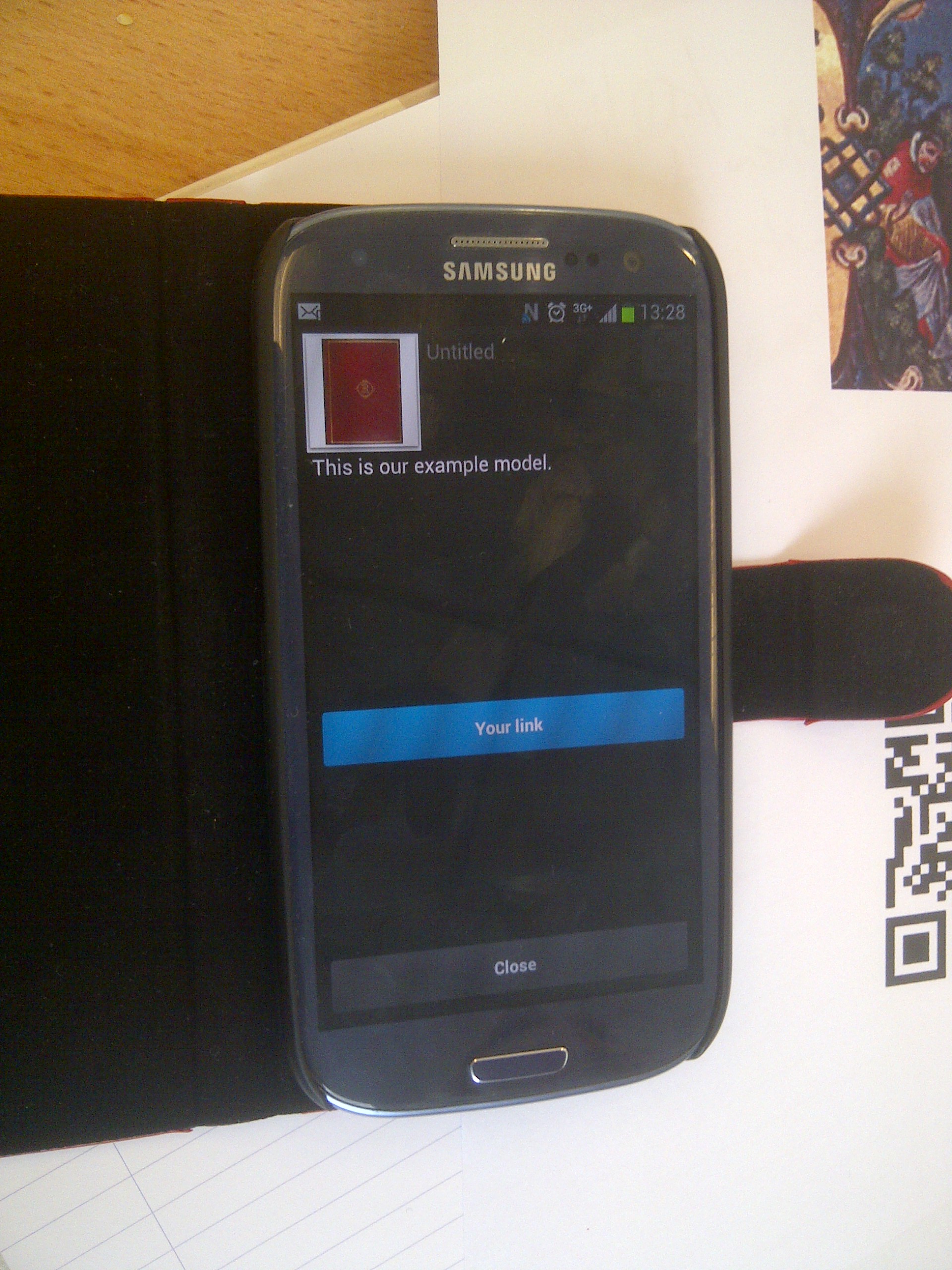

Having started on the project in September, I began by familiarising myself with AR. The technical workshop was my first opportunity to create a working example of AR using Junaio and test it in practice.

See also: great blog posts from Rose and Matt about the technical workshop http://teamscarlet.wordpress.com/2012/10/05/scarlet-coding-for-the-terrified-or-how-i-learned-to-stop-worrying-and-love-php-3/ and http://teamscarlet.wordpress.com/2012/10/05/scarlet-technical-workshop/.

Technical support from MIMAS

Jean tasked me with providing a proof-of-concept, and in my enthusiasm I thought it wouldn’t take long. These are the lessons learned from the longer-than-expected process:

- Unlike building a website in HTML, the work can only be tested properly on the live server, i.e. no live site = no demo.

- Creating a Junaio channel with a callback URL is actually relatively straightforward, but explaining your technical requirements can be difficult as a newbie to AR. Matt answered detailed questions from our IT department in order to set up what was essentially ‘a folder’.

- IT resourcing issues need to be addressed before the new academic year; although I completed a detailed work request, it was turned down by our IT department. A silver lining was my connection with the Visual Arts Data Service (VADS), which led to them kindly hosting the folder for us.

- FTP access to your folder is essential for building an AR application, as tweaks to the code need to be made throughout testing on the live server.

- Equipment – this raises interesting issues of inclusivity for students using AR in education: both Jean and I have had to borrow devices from family in order to test the AR content. Devices can be iPad2 (and above), iPhone3 (and above), and Android devices (untested by us so far).

- Internet – the Junaio application requires a good connection to work on the device, and the eduroam network has not always obliged.

- Why AR? AR is undoubtedly exciting, with a bit of the thrill of the new in education; however, that makes it even more important to put pedagogy before technology. By working closely with an academic to tailor something to his students’ needs, we hope to keep the pedagogy at the forefront of AR.

- Technical support from MIMAS through Matt’s role in the SCARLET+ project was essential.
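As an illustration of the FTP point above, here is a minimal sketch in Python of pushing a local working folder up to the live folder after each tweak. It is not the script we used; the host name, login details and folder paths are placeholders, and it assumes the matching folders already exist on the server.

```python
from ftplib import FTP
from pathlib import Path, PurePosixPath


def plan_uploads(local_root, remote_root):
    """Pair every file under local_root with its destination path on the server."""
    local_root = Path(local_root)
    remote_root = PurePosixPath(remote_root)
    pairs = []
    for path in sorted(local_root.rglob("*")):
        if path.is_file():
            relative = path.relative_to(local_root)
            pairs.append((path, str(remote_root / relative.as_posix())))
    return pairs


def upload(host, user, password, local_root, remote_root):
    """Send each planned file to the live folder over FTP.

    Assumes the remote folders already exist; this sketch does not create them.
    """
    with FTP(host) as ftp:
        ftp.login(user=user, passwd=password)
        for local_path, remote_path in plan_uploads(local_root, remote_root):
            with open(local_path, "rb") as handle:
                ftp.storbinary(f"STOR {remote_path}", handle)


# Hypothetical usage (placeholder host, credentials and paths):
# upload("ftp.website.ac.uk", "username", "password", "scarlet", "/dev/scarlet")
```

Keeping the planning step separate from the transfer means you can print the list of files and check where they will land before anything touches the live server.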

Glossary

- callback URL – this is the weblink to your AR content, and specifically to the ‘html’ folder; an example URL would be http://www.website.ac.uk/dev/scarlet/html

- channel – this is a location where Junaio will make your content available to others – users can use a QR code or a URL to access your channel and therefore access the AR content

- FTP access – File Transfer Protocol – permissions on the folder need to be set up so AR developers can transfer files from a local machine to the live folder

- Junaio – an augmented reality browser that you can download for free on a range of devices in order to access both location-based and static (GLUE) AR

- pattern – also called your ‘tracking image’ by Junaio. After a user has scanned your AR channel they need to find the ‘pattern’ or ‘tracking image’; this is the trigger for your AR content, which will appear on top of it

- portal to another world – this concept is mentioned briefly above; a forthcoming blog post will consider how we might enable this through AR